Optimizing Your Output: How to Use Proxies Properly

In today's digital landscape, getting the most out of data extraction and web automation is essential. One of the most effective ways to improve efficiency is to use proxies. Proxies act as intermediaries between your device and the internet, allowing you to access content safely while preserving anonymity. Whether you are scraping data, running multiple bots, or accessing region-locked content, the right proxy tools can make all the difference.

In this article, we discuss various tools and strategies for using proxies efficiently. From proxy scrapers that help you collect lists of usable proxies to proxy checkers that verify their reliability and speed, we aim to cover everything you need to know. We also look at the different types of proxies, such as HTTP and SOCKS, and discuss the advantages of dedicated versus shared proxies. By understanding these elements and using the best available tools, you can significantly improve your web scraping efforts and streamline your workflow.

Understanding Proxy Servers and Their Types

Proxy servers act as intermediaries between a user and the internet, handling requests and responses while obscuring the user's real IP address. They serve various purposes, such as enhancing anonymity, improving security, and enabling data extraction by getting around geographical restrictions. Understanding how proxies work is essential for using them effectively in tasks like data gathering and automation.

There are several types of proxies, with HTTP and SOCKS being the most commonly used. HTTP proxies are designed specifically for web traffic, making them well suited to regular browsing and web scraping tasks. SOCKS proxies, on the other hand, operate at a lower level and can carry many kinds of traffic, including email and FTP, and come in two versions: SOCKS4 and SOCKS5. The key distinction is that SOCKS5 adds support for authentication and UDP traffic (SOCKS4 handles TCP connections only), allowing for more versatile and secure connections.
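To make the difference between proxy types concrete, here is a minimal sketch of how each type is commonly written as a proxy URL, with the scheme identifying the protocol. The helper name `build_proxy_url` and the example addresses are illustrative assumptions, not part of any particular tool, and whether a given client accepts `socks4://` or `socks5://` URLs depends on that client.

```python
from urllib.parse import quote

# Schemes this sketch accepts; the set is an assumption chosen for illustration.
SUPPORTED_SCHEMES = {"http", "https", "socks4", "socks5"}


def build_proxy_url(scheme, host, port, username=None, password=None):
    """Return a proxy URL such as 'socks5://user:pass@203.0.113.5:1080'."""
    if scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {scheme}")
    if username is not None and scheme == "socks4":
        # SOCKS4 has no username/password authentication; SOCKS5 does.
        raise ValueError("SOCKS4 does not support username/password authentication")
    auth = ""
    if username is not None:
        # Percent-encode credentials so characters like '@' do not break the URL.
        auth = f"{quote(username, safe='')}:{quote(password or '', safe='')}@"
    return f"{scheme}://{auth}{host}:{port}"


if __name__ == "__main__":
    print(build_proxy_url("http", "203.0.113.5", 8080))
    print(build_proxy_url("socks5", "203.0.113.5", 1080, "user", "p@ss"))
```

Keeping the scheme explicit in the URL means the rest of a scraper does not need to know which kind of proxy it has been handed.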

When selecting a proxy, it's crucial to evaluate quality and type against your requirements. Private proxies provide resources dedicated to a single user, which generally means better performance and privacy. Public proxies, by comparison, are shared among many users, making them less reliable but often available for free. Understanding the differences and applications of each type helps users make informed decisions for efficient web scraping and automation tasks.

Tools for Scraping Proxy Servers

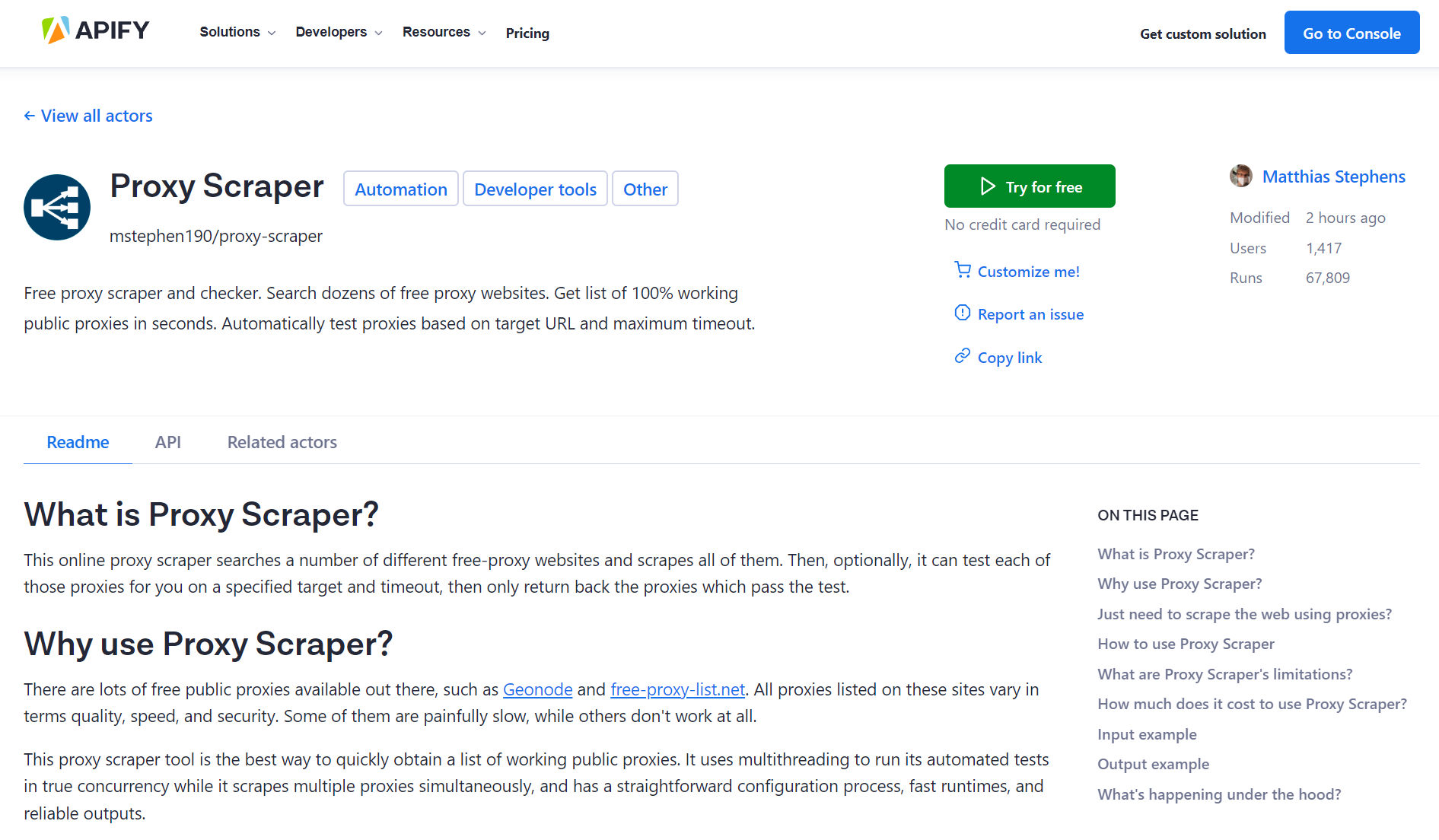

When it comes to gathering proxies, having the right tools is essential. A reliable proxy scraper is fundamental for collecting a diverse list of proxies that can be used for a variety of purposes, such as web scraping or automation. Many people turn to free proxy scrapers, but it's vital to assess their performance and reliability to make sure they meet your needs. Fast proxy scrapers speed up the collection process, so you can build a larger candidate list in less time.
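At its core, a proxy scraper pulls `host:port` pairs out of whatever text a proxy list page returns. The following is a minimal sketch of that extraction step only, assuming the page text has already been downloaded and that proxies appear as IPv4 `ip:port` strings; the function name `extract_proxies` is hypothetical.

```python
import re

# Matches candidates such as 203.0.113.5:8080; range validation happens below.
PROXY_PATTERN = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3}):(\d{1,5})\b")


def extract_proxies(text):
    """Return unique, plausibly valid 'ip:port' strings in order of appearance."""
    seen = set()
    result = []
    for host, port in PROXY_PATTERN.findall(text):
        octets_ok = all(0 <= int(octet) <= 255 for octet in host.split("."))
        port_ok = 1 <= int(port) <= 65535
        candidate = f"{host}:{port}"
        if octets_ok and port_ok and candidate not in seen:
            seen.add(candidate)
            result.append(candidate)
    return result


if __name__ == "__main__":
    page = "<td>203.0.113.5:8080</td> <td>198.51.100.7:3128</td> 203.0.113.5:8080"
    print(extract_proxies(page))
```

Deduplicating at this stage keeps the later checking step from testing the same proxy twice.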

Once you've collected a list of candidate proxies, running them through a capable proxy checker is crucial. The best proxy checkers not only confirm that each proxy works but also evaluate its speed and anonymity. A proxy verification tool helps eliminate unusable proxies, so you can concentrate on high-quality connections. Many users appreciate services that combine scraping and checking, providing a comprehensive solution for managing proxies.

For those interested in more specialized features, tools such as ProxyStorm can provide additional options for finding high-quality proxies. Additionally, knowing the distinction between SOCKS4 and SOCKS5 proxies will help you choose the best proxy sources for web scraping and other tasks. With a good grasp of the top tools for scraping free proxies and evaluating their reliability, you'll be ready to use proxies successfully.

Checking and Testing Proxies

When using proxies, confirming that they work is crucial for good performance. A dependable proxy checker is necessary to verify that your proxies are operating as intended. These tools can quickly run through your proxy list, identifying which proxies are live and usable. Fast proxy scrapers and checkers can considerably reduce the time spent on this process, allowing you to concentrate on your web scraping rather than troubleshooting dead proxies.

In addition to basic functionality checks, verifying proxy speed is another important part of proxy management. Tools like FastProxy can give insight into how quickly a proxy responds to requests. This information is essential for web scraping, where response time directly affects how fast data can be collected. For tasks requiring high anonymity or performance, measuring the speed of each proxy will help you select the best options from your list.
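A functionality check and a speed check can be combined by timing a single request through the proxy. The sketch below uses only the Python standard library and is not how any particular checker works: the test URL, the timeout, and the function names are all assumptions, and `urllib` handles HTTP proxies only, so SOCKS proxies would need a different client.

```python
import time
import urllib.request


def probe_via_proxy(proxy, url="http://example.com/", timeout=5.0):
    """Fetch `url` through an HTTP proxy given as 'host:port'; raises on failure."""
    handler = urllib.request.ProxyHandler(
        {"http": f"http://{proxy}", "https": f"http://{proxy}"}
    )
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=timeout) as response:
        response.read(1024)  # read a little to confirm data actually flows


def measure_latency(proxy, probe=probe_via_proxy):
    """Return seconds taken by one probe, or None if the proxy failed."""
    start = time.perf_counter()
    try:
        probe(proxy)
    except Exception:
        return None
    return time.perf_counter() - start


def rank_by_speed(proxies, probe=probe_via_proxy):
    """Return (proxy, seconds) pairs for working proxies, fastest first."""
    timed = [(proxy, measure_latency(proxy, probe)) for proxy in proxies]
    working = [(proxy, seconds) for proxy, seconds in timed if seconds is not None]
    return sorted(working, key=lambda item: item[1])
```

Passing the probe as a parameter is a deliberate choice: it lets the ranking logic be tested without network access and makes it easy to swap in a SOCKS-capable client later. A single measurement is noisy, so averaging several probes per proxy would give a steadier ranking.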

Another key factor in managing proxies is security and anonymity. Checking anonymity level is important to avoid detection when scraping websites. A proxy verification tool can help determine whether a proxy is transparent, anonymous, or elite: a transparent proxy passes your real IP address along to the target, an anonymous proxy hides your IP but still reveals that a proxy is in use, and an elite proxy does neither. Understanding the differences between HTTP, SOCKS4, and SOCKS5 proxies also helps in selecting the right type for your purposes. By combining functionality checks, speed testing, and anonymity evaluations, you can confirm that your proxy setup is robust and suitable for your web scraping projects.
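One common way to grade anonymity is to send a request through the proxy to a server that echoes back the headers it received, then inspect those headers. The sketch below covers only the classification step and assumes you already have the echoed headers as a dictionary and know your real IP address; the header list and the function name `classify_anonymity` are illustrative assumptions, and real checkers may use different rules.

```python
# Headers that commonly reveal a proxy is in use (an illustrative, non-exhaustive list).
PROXY_HEADERS = ("via", "x-forwarded-for", "forwarded", "x-real-ip", "proxy-connection")


def classify_anonymity(seen_headers, real_ip):
    """Classify a proxy from the headers the target server saw.

    transparent: the real IP leaks in some header value
    anonymous:   no IP leak, but proxy-revealing headers are present
    elite:       neither of the above
    """
    normalized = {name.lower(): str(value) for name, value in seen_headers.items()}
    # Simplification: a plain substring test, which could misfire when the
    # real IP happens to be a substring of a longer address.
    if any(real_ip in value for value in normalized.values()):
        return "transparent"
    if any(name in normalized for name in PROXY_HEADERS):
        return "anonymous"
    return "elite"


if __name__ == "__main__":
    print(classify_anonymity({"Via": "1.1 proxy"}, "198.51.100.7"))
```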

Using Proxy Services for Web Scraping

Web scraping involves extracting information from websites, but many sites have measures in place to block automated access. Using proxies can help you get around these restrictions so that your data extraction tasks run smoothly. A proxy acts as an intermediary between your scraper and the target website, allowing you to make requests from different IP addresses. This lowers the risk of being blocked or rate-limited by the site, letting you gather more data effectively.
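Making requests from different IP addresses usually comes down to rotating through a pool of proxies. Here is a minimal round-robin sketch; the class name `ProxyRotator` and the helper `assign_proxies` are hypothetical, and a production scraper would likely also drop proxies that start failing.

```python
import itertools


class ProxyRotator:
    """Hand out proxies from a fixed pool in round-robin order."""

    def __init__(self, proxies):
        pool = list(proxies)
        if not pool:
            raise ValueError("proxy pool must not be empty")
        self._cycle = itertools.cycle(pool)

    def next_proxy(self):
        return next(self._cycle)


def assign_proxies(urls, rotator):
    """Pair each URL with the next proxy so consecutive requests use different IPs."""
    return [(url, rotator.next_proxy()) for url in urls]


if __name__ == "__main__":
    rotator = ProxyRotator(["203.0.113.5:8080", "198.51.100.7:3128"])
    for url, proxy in assign_proxies(["https://example.com/a", "https://example.com/b"], rotator):
        print(url, "via", proxy)
```

Round-robin spreads requests evenly across the pool; choosing proxies at random is a reasonable alternative when a predictable order is undesirable.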

When picking proxies for web scraping, it is important to choose between private and public proxies. Private proxies deliver better performance and anonymity, making them best suited for serious data extraction tasks. Public proxies, while often free, can be unreliable and slow, which can hinder your data extraction. It is also important to test proxy speed and anonymity, so that you end up with fast, high-quality proxies that meet your web scraping needs.

Using the right tools can improve your data extraction process. There are many proxy scrapers and checkers available, addressing various requirements. Fast proxy scrapers and good checkers can help you find and validate proxies quickly. By scripting proxy scraping in Python or using dependable online proxy list generators, you can automate the process of gathering high-quality proxies for your data extraction tasks.

Best Practices for Proxy Efficiency

To maximize efficiency when using proxies, it is vital to understand the types of proxies available and choose the right one for your task. HTTP proxies are well suited to web scraping projects, as they handle regular web traffic effectively. SOCKS proxies, particularly SOCKS5, are more versatile and support other kinds of traffic over both TCP and UDP. Assess the requirements of your task and choose between private and public proxies based on your need for security and speed.

Regularly monitoring and validating your proxies is important for maintaining good performance. Use a reliable proxy checker to assess the speed and anonymity of your proxies. This will help you spot dead or slow proxies that could interrupt your scraping tasks. Tools like ProxyStorm can help with this task by providing thorough verification capabilities. Keeping your proxy list up to date saves time and effort and allows you to concentrate on your main objectives.

Lastly, take advantage of automation to improve your proxy management. Incorporating a proxy scraper into your workflow speeds up the acquisition of fresh proxy lists and helps ensure that you are always working with good proxies. Combining this with a proxy checker streamlines things further, allowing you to automate the task of finding and evaluating proxies. This not only increases your efficiency but also reduces the manual work involved in managing proxies.
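Because each proxy check mostly waits on the network, an automated pipeline can test many proxies at once with a thread pool. The following is a minimal sketch of that filtering step, assuming you supply your own `is_alive` callable that returns a truthy value for a working proxy; the function name `filter_working` and the default worker count are assumptions.

```python
from concurrent.futures import ThreadPoolExecutor


def _safe_check(is_alive, proxy):
    """Run one check, treating any exception as a failed proxy."""
    try:
        return bool(is_alive(proxy))
    except Exception:
        return False


def filter_working(proxies, is_alive, max_workers=20):
    """Check proxies concurrently and return the working ones in input order."""
    pool_input = list(proxies)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with pool_input.
        results = list(pool.map(lambda proxy: _safe_check(is_alive, proxy), pool_input))
    return [proxy for proxy, ok in zip(pool_input, results) if ok]


if __name__ == "__main__":
    # Stand-in checker for demonstration; a real one would make a request via the proxy.
    print(filter_working(["a:1", "b:2", "c:3"], lambda proxy: proxy != "b:2"))
```

Running this on a schedule against a freshly scraped list is one simple way to keep a pool current without manual effort.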

Free vs Paid Proxy Choices

When deciding between free and paid proxy options, it's crucial to understand the trade-offs involved. Free proxies attract users because they cost nothing, making them appealing for casual web browsing or minor projects. However, they come with significant drawbacks, including unstable connections, slower speeds, and possible security risks, since they are often shared by many users. Additionally, free proxies may not offer anonymity, making them inappropriate for sensitive tasks like web scraping or automation.

Paid proxies, on the other hand, typically provide a higher level of reliability and consistency. They are often more secure, offering private servers with faster speeds and better uptime. Paid options also usually come with features like proxy verification tools and better customer support, making them suitable for businesses that rely on steady performance for data extraction and web scraping. Moreover, premium plans often include access to a selection of proxy types, such as HTTP, SOCKS4, and SOCKS5, allowing users to select the right proxy for their particular needs.

Ultimately, the choice between free and paid proxies depends on the user's requirements. For those doing significant web scraping or automation, or requiring reliable anonymity, investing in high-quality paid proxies is the smarter choice. Conversely, if the requirements are limited or experimental, free proxies may be sufficient. Assessing the specific use case and understanding the advantages of each option can help users make the right choice.

Automation and Proxy Utilization

Proxies play a vital role in automation, enabling users to carry out tasks such as data extraction and SEO analysis without being blocked or rate-limited. By incorporating a reliable proxy scraper into your routine, you can gather large amounts of data while lowering the risk of detection by websites. Automation allows multiple tasks to run simultaneously, and good proxies help keep those tasks running smoothly and effectively.

When automating processes, understanding the distinction between private and public proxies is essential. Private proxies offer better anonymity and stability, making them ideal for automated tasks requiring consistent performance. Public proxies, while more widely available and often free, can be unpredictable in terms of performance and reliability. Selecting the right type of proxy for the kind of automation you are running is essential for maximizing efficiency.

Lastly, using advanced proxy checkers strengthens your automation strategy by ensuring that only working, fast proxies are used. Fast proxy scrapers and checkers can confirm the speed and anonymity of proxies in real time, allowing you to maintain a high level of performance in automated tasks. By carefully selecting and regularly testing your proxy list, you can further improve your automation for better data extraction results.